The comprehensive guide to Edge AI in IoT

Explore how Edge AI is transforming IoT by enabling real-time decision-making, reducing latency, and enhancing security—unlocking new possibilities for connected devices.

Edge AI is transforming IoT devices from data collectors into intelligent decision-makers. By processing information locally, devices become faster, smarter, and more secure. This shift from cloud-dependent to on-device intelligence is reshaping major industries, from autonomous vehicles making split-second driving decisions to factories predicting equipment maintenance needs in real time.

The numbers reflect this shift. The Edge AI market was valued at $14.8 billion in 2023 and is projected to reach $163 billion by 2033. This growth is fueled by demand for instant analytics, advances in AI technology, and the rollout of 5G networks.

As we explore Edge AI's impact on IoT, we'll see how this technology is not only enhancing existing systems but enabling entirely new possibilities.

Understanding Edge AI

At its core, Edge AI involves deploying artificial intelligence models on edge devices, allowing them to process and analyze data where it's generated: on the device, in real time. This approach reduces reliance on cloud computing, which lowers latency and bandwidth costs and improves data privacy.

Edge AI relies on three critical components:

- Edge computing hardware that can process AI workloads locally:

  - Microprocessor units (MPUs): the enterprise-grade solution for high-performance applications, typically integrated directly at the chip level in production systems.

  - Microcontroller units (MCUs): used for simpler, power-efficient implementations, particularly in smaller-scale or low-power systems.

- Optimized AI models designed for constrained hardware, using techniques like quantization and pruning to reduce the computational load.

- Edge AI software infrastructure that enables the deployment, management, monitoring, and updating of AI models on edge devices.

These components work together as an integrated system to analyze data from various sources like connected sensors, building management systems, or enterprise networks.

In sectors where rapid response times are crucial, Edge AI provides faster, more secure, cost-effective solutions. For example, manufacturing facilities use Edge AI for detecting defects on assembly lines with computer vision, while building management systems employ it for real-time equipment health monitoring through audio classification and system data analysis.

Technical hurdles when implementing Edge AI

While the benefits of Edge AI are clear, implementing it comes with a few unique challenges.

1. Power consumption and energy efficiency

Running AI algorithms on edge devices can significantly drain their energy supply, because processing power-hungry models locally raises energy demand. The problem is most acute for devices deployed in remote locations or running on batteries, such as sensor networks and remote monitoring systems.

Solutions to power consumption challenges include:

- Model optimization: Techniques like quantization and pruning reduce model size and computational demands, lowering energy usage without significantly sacrificing accuracy.

- Energy-efficient hardware: AI accelerators like Google’s Edge TPU are specifically designed to process AI tasks efficiently, consuming less power.

- Energy harvesting: Technologies that capture and convert ambient energy (e.g., solar or kinetic energy) into power for devices are also being explored to reduce dependence on external power sources.
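To make the link between model optimization and battery life concrete, here is a rough back-of-the-envelope sketch. It assumes a device that wakes up, runs inference, and sleeps again on a fixed period. Every number in it (currents, timings, battery capacity) is hypothetical and chosen only to illustrate the arithmetic, and the function names are illustrative rather than part of any vendor SDK.

```python
def average_current_ma(active_ma: float, sleep_ma: float,
                       active_s: float, period_s: float) -> float:
    """Time-weighted average current for a wake / infer / sleep cycle."""
    if period_s <= 0 or not 0 <= active_s <= period_s:
        raise ValueError("need period_s > 0 and 0 <= active_s <= period_s")
    duty = active_s / period_s
    return active_ma * duty + sleep_ma * (1.0 - duty)


def battery_life_hours(capacity_mah: float, avg_ma: float) -> float:
    """Idealized runtime: ignores self-discharge, temperature, and converter losses."""
    return capacity_mah / avg_ma


# Hypothetical figures: a 2000 mAh battery, 120 mA while inferring, 0.05 mA asleep,
# one inference per minute. The "optimized" model is assumed to finish in 0.5 s
# instead of 2 s (for example after quantization or pruning).
CAPACITY_MAH = 2000.0
baseline_ma = average_current_ma(active_ma=120.0, sleep_ma=0.05, active_s=2.0, period_s=60.0)
optimized_ma = average_current_ma(active_ma=120.0, sleep_ma=0.05, active_s=0.5, period_s=60.0)

print(f"baseline:  {baseline_ma:.2f} mA avg, {battery_life_hours(CAPACITY_MAH, baseline_ma):.0f} h")
print(f"optimized: {optimized_ma:.2f} mA avg, {battery_life_hours(CAPACITY_MAH, optimized_ma):.0f} h")
```

Under these assumptions the active window dominates the energy budget, which is why shortening inference time tends to pay off more than trimming sleep current.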

2. Computational limitations

Edge devices typically have limited processing power and memory compared to cloud-based systems. Running resource-heavy models like large language models (LLMs) at the edge can be difficult. Devices with constrained hardware must balance performance, energy use, and memory capacity to deliver meaningful AI results.

Key strategies include:

- Use efficient model architectures: Lightweight AI models such as MobileNet and EfficientNet are specifically designed for resource-constrained environments.

- Leverage hardware accelerators: Chips like NPUs (Neural Processing Units) or FPGAs (Field-Programmable Gate Arrays) help accelerate AI workloads without overtaxing the device’s CPU.

- Hybrid approaches: Some systems implement a combination of edge and cloud computing, where the edge handles real-time processing, and more computationally intensive tasks are offloaded to the cloud when possible.
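One common way to realize the hybrid approach is confidence-based routing: the edge model answers whenever it is sure enough, and only uncertain samples are sent to a larger cloud model. The sketch below is a minimal illustration under that assumption. `tiny_edge_model` and `big_cloud_model` are hypothetical stand-ins for real models, and the 0.8 threshold is arbitrary.

```python
from dataclasses import dataclass
from typing import Callable, Sequence, Tuple

# A "model" here is any callable returning (label, confidence in [0, 1]).
Model = Callable[[Sequence[float]], Tuple[str, float]]


@dataclass
class Decision:
    label: str
    confidence: float
    source: str  # "edge" or "cloud"


def classify_hybrid(sample: Sequence[float], edge_model: Model, cloud_model: Model,
                    threshold: float = 0.8, cloud_available: bool = True) -> Decision:
    """Use the edge result when confident (or offline); otherwise offload to the cloud."""
    label, confidence = edge_model(sample)
    if confidence >= threshold or not cloud_available:
        return Decision(label, confidence, "edge")
    label, confidence = cloud_model(sample)
    return Decision(label, confidence, "cloud")


# Hypothetical stand-ins so the sketch runs without any ML framework.
def tiny_edge_model(sample: Sequence[float]) -> Tuple[str, float]:
    mean = sum(sample) / len(sample)
    return ("normal", 0.95) if mean < 10.0 else ("fault", 0.55)


def big_cloud_model(sample: Sequence[float]) -> Tuple[str, float]:
    return ("fault", 0.99) if max(sample) > 20.0 else ("normal", 0.97)


easy = classify_hybrid([1.0, 2.0, 3.0], tiny_edge_model, big_cloud_model)
hard = classify_hybrid([15.0, 30.0, 12.0], tiny_edge_model, big_cloud_model)
offline = classify_hybrid([15.0, 30.0, 12.0], tiny_edge_model, big_cloud_model,
                          cloud_available=False)
print(easy, hard, offline, sep="\n")
```

Falling back to the edge result when the cloud is unreachable keeps the device responsive on weak networks, at the cost of acting on a lower-confidence answer.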

3. Data management and storage

Handling and storing vast amounts of data locally presents a challenge, especially in environments where storage capacity is limited. Edge AI devices need to be able to process, filter, and store data efficiently without overwhelming the system.

Effective data management techniques include:

- Edge analytics: Instead of transmitting raw data, edge devices can analyze and summarize data locally, only sending relevant insights to the cloud.

- Data compression: Compressing data before storage or transmission reduces the volume while maintaining the integrity of the information.

- Hierarchical storage systems: Combining fast, limited storage (e.g., SSD) for immediate data needs with slower, larger storage (e.g., SD cards) for long-term data retention.
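The first two techniques can be sketched with nothing but the Python standard library: summarize a window of raw readings on the device, then compress whatever is stored or transmitted. The field names and the synthetic readings below are illustrative assumptions, not a prescribed payload format.

```python
import json
import statistics
import zlib
from typing import Dict, List


def summarize(readings: List[float]) -> Dict[str, float]:
    """Edge analytics: reduce a window of raw samples to a few summary statistics."""
    return {
        "count": len(readings),
        "min": min(readings),
        "max": max(readings),
        "mean": round(statistics.fmean(readings), 3),
    }


def pack(payload) -> bytes:
    """Serialize to compact JSON, then apply lossless zlib compression (level 9)."""
    return zlib.compress(json.dumps(payload, separators=(",", ":")).encode("utf-8"), 9)


def unpack(blob: bytes):
    """Inverse of pack(): decompress and parse the JSON payload."""
    return json.loads(zlib.decompress(blob).decode("utf-8"))


# Synthetic window of 600 temperature-like samples (hypothetical data).
raw = [20.0 + (i % 10) * 0.1 for i in range(600)]

raw_json = json.dumps(raw).encode("utf-8")
raw_blob = pack(raw)                 # compressed raw data, fully recoverable
summary_blob = pack(summarize(raw))  # compressed summary, far smaller but lossy

print(len(raw_json), len(raw_blob), len(summary_blob))
```

Summarization trades detail for size, while compression keeps every sample intact, so the two are often combined: summaries go to the cloud routinely and compressed raw windows are kept locally in case they are needed later.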

Platforms that offer unified edge-to-cloud solutions simplify the management of power consumption, computational performance, and data by providing consistent APIs and tools across the stack. This seamless integration ensures data flows efficiently from device to cloud, improving real-time analytics and enabling scalable Edge AI applications.

The impact of 5G on Edge AI

Reliable networks are the backbone of edge computing. For edge devices to process and act on data in real time, they require fast, stable connections. Weak or inconsistent networks lead to delays, compromising the performance of critical applications like autonomous systems or industrial automation.

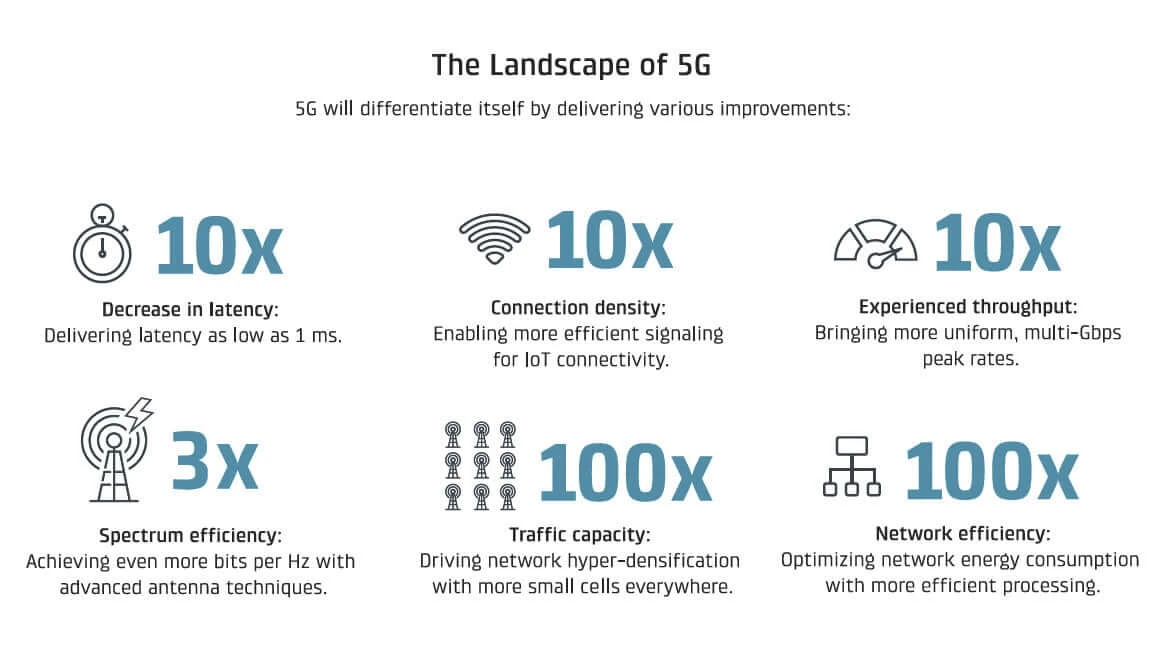

This is where 5G comes in, bringing ultra-reliable low-latency communications (URLLC) to edge computing. With a latency target as low as 1 millisecond, URLLC supports immediate responsiveness in real-world applications where every millisecond counts.

While speed is critical to Edge AI solutions, 5G's massive machine-type communications (mMTC) capability is built for scale: thousands of connected devices can operate simultaneously without compromising efficiency. These connected devices, often spread across smart cities or industrial sites, rely on 5G’s ability to maintain low energy use and minimal network congestion. Through network slicing, 5G allocates specific bandwidth and quality of service (QoS) to mission-critical applications, so they have the resources they need to perform reliably, even in data-heavy environments.

Hardware platforms for Edge AI

Choosing the right hardware is critical to the success of Edge AI projects: it impacts everything from development speed to production costs and long-term scalability.

There are several factors to consider:

- Processing power: The ability to handle complex AI models in real time is crucial. Different AI workloads demand different levels of computing capability.

- Energy efficiency: Many edge devices operate in power-constrained environments, such as remote locations or battery-powered systems. Efficient power usage is essential to ensure longevity without sacrificing processing capabilities.

- Development complexity: The ease of implementing and programming the hardware significantly impacts development time and costs.

- Ecosystem support: Access to development tools, software libraries, and community resources can dramatically accelerate development.

Let's look at some hardware options and how they address these considerations:

Microcontrollers (MCUs)

Microcontrollers represent the simplest path to embedding intelligence at the edge. These single-purpose processors are designed for efficiency and specific tasks, making them ideal for focused applications. MCUs excel in:

- Power efficiency: Their simplified architecture enables long-term operation on battery power

- Cost-effective deployment: Simple design means lower production costs

- Easy implementation: Minimal supporting components required

- Predictable performance: Direct control over processing tasks

MCUs are particularly valuable in distributed systems where you need lightweight inference at many points, such as sensor networks analyzing patterns or industrial systems monitoring equipment health. Their efficiency makes them ideal when computing needs are well-defined.

Microprocessors (MPUs)

Microprocessors take edge computing capabilities to the next level. Similar to the processors in your computer, MPUs can run full operating systems like Linux and handle sophisticated AI workloads. This power enables:

- Complex AI models: Run advanced algorithms like computer vision and natural language processing directly on-device

- Multiple simultaneous tasks: Handle various processes while maintaining performance

- Rich software environments: Support for advanced development frameworks and tools

- Flexible applications: Adapt to changing requirements through software updates

However, this power comes with a significant challenge: implementing MPUs in custom hardware is far more complex than working with MCUs and requires extensive engineering resources and expertise.

Single-board computers (SBCs)

To address the complexity of MPU implementation, single-board computers have emerged as a powerful solution, packaging MPU chips into complete development platforms. Rather than wrestling with complex MPU hardware design, teams can leverage these ready-to-use systems for:

- Rapid development: Start building immediately without hardware design

- Proven reliability: Pre-validated hardware configurations

- Rich ecosystem: Access to existing software libraries and tools

- Scaling flexibility: Use for both development and initial production, switching to custom hardware only when volumes justify the investment

For organizations looking to leverage advanced Edge AI capabilities, SBCs offer a practical path forward. They not only accelerate development but can also support substantial production volumes, and teams often scale considerably on SBCs before investing in custom hardware.

When choosing an SBC for Edge AI, consider the trade-offs between performance, customizability, and integration. High-performance solutions offer maximum processing power but come at a premium; customizable options provide flexibility but require more integration effort; and integrated solutions offer a balance of performance and ease of use.

Let's compare three options representing these categories:

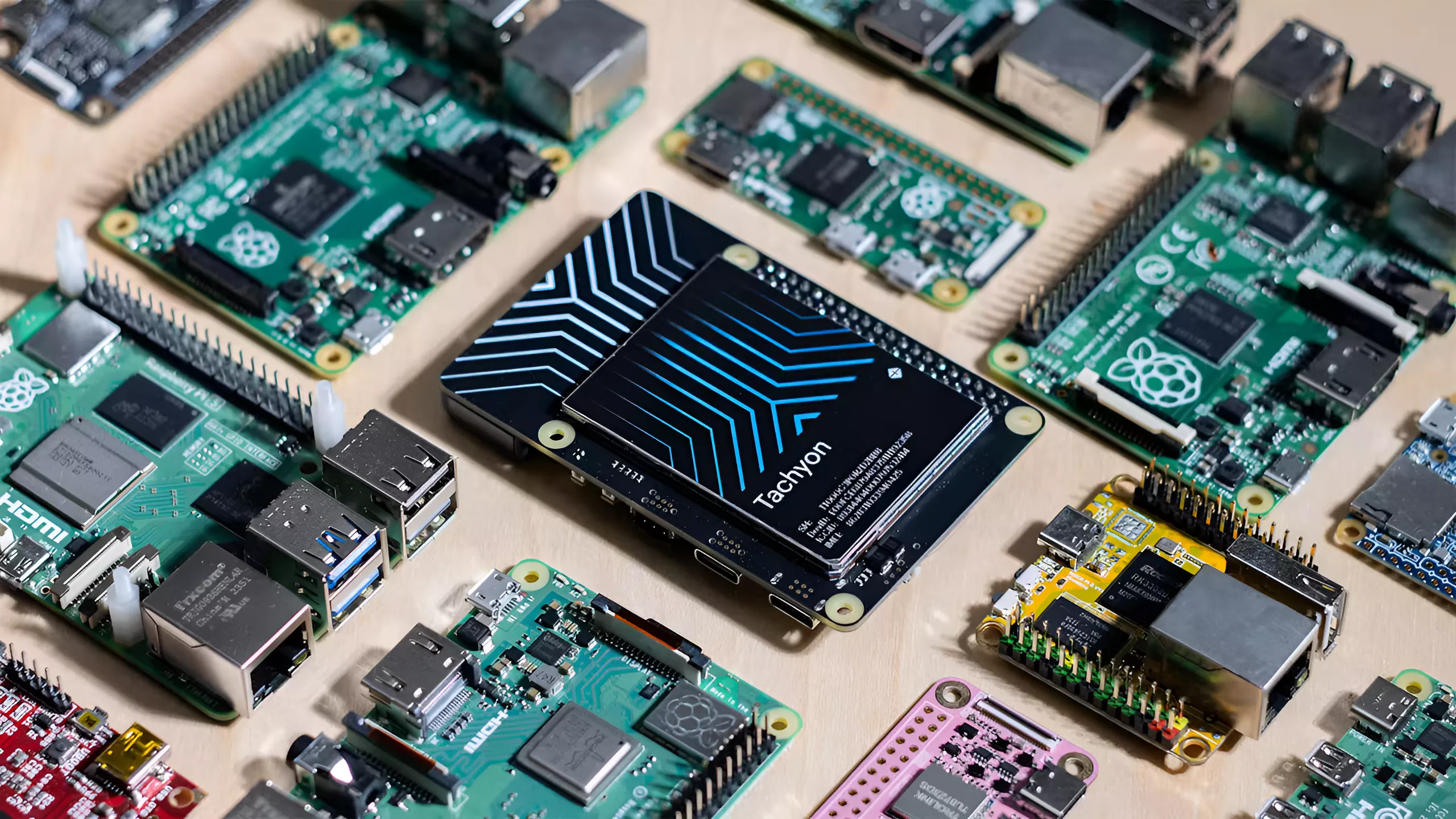

Particle Tachyon

Integrated solution

Particle's Tachyon offers a compelling blend of performance, connectivity, and ease of use, ideal for a wide range of Edge AI applications. Its integrated design combines a powerful processor with a built-in 5G modem and a 12 TOPS AI accelerator, enabling efficient on-device processing for tasks like computer vision and sensor data analysis. By leveraging the Raspberry Pi form factor, Tachyon benefits from a broad ecosystem of accessories and software libraries, simplifying development and accelerating time to market. Its competitive price point and comprehensive feature set, combined with Particle's robust security and scalability, make it an attractive choice for organizations deploying Edge AI solutions.

NVIDIA Jetson AGX Orin Developer Kit

High-performance

The NVIDIA Jetson AGX Orin delivers exceptional AI processing power, making it the platform of choice for compute-intensive Edge AI applications. With up to 275 TOPS of performance, it can handle complex deep learning models and demanding real-time workloads. The Jetson platform offers access to NVIDIA's extensive suite of AI software and libraries, enabling developers to leverage cutting-edge AI capabilities. While it offers remarkable performance, the Jetson AGX Orin comes at a premium price point and requires additional effort for integration due to its lack of built-in connectivity. This makes it best suited for specialized applications where maximum processing power is essential.

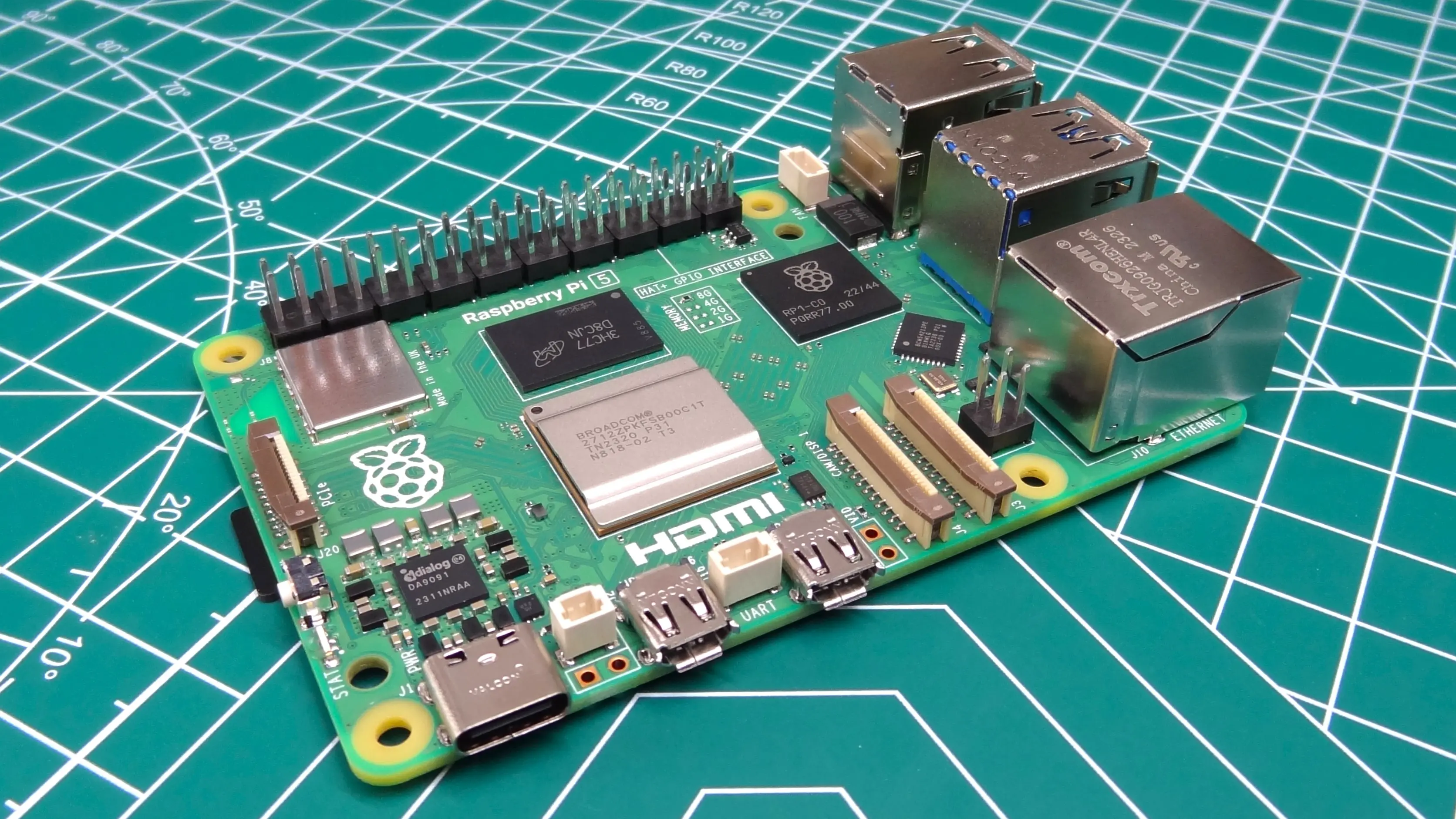

Raspberry Pi 5 with AI accelerator add-ons

Customizable/DIY

The Raspberry Pi 5, combined with various AI accelerator add-ons, provides a flexible and cost-effective entry point for Edge AI development. Its open architecture and extensive community support allow for a high degree of customization, enabling developers to tailor their hardware and software stack to specific project needs. The Raspberry Pi ecosystem offers a wide range of AI accelerators from different vendors, giving developers the freedom to choose the best option for their application. However, this flexibility comes with a trade-off: managing multiple vendors and integrating various components requires additional effort and expertise. It's a solid choice for developers comfortable with hardware integration and seeking a cost-conscious solution for less demanding Edge AI tasks.

| Specs | Particle Tachyon | NVIDIA Jetson AGX Orin Developer Kit | Raspberry Pi 5 with AI Accelerator Add-ons |
|---|---|---|---|
| CPU | Octa-core Qualcomm Kryo (1x 2.7GHz, 3x 2.4GHz, 4x 1.9GHz) | 12-core Arm Cortex-A78AE v8.2 @ 2.2GHz | Broadcom BCM2712, quad-core Arm Cortex-A76 @ 2.4GHz |
| GPU | Adreno 643 | 2048-core NVIDIA Ampere | VideoCore VII |
| AI Accelerator | 12 TOPS AI accelerator | Up to 275 TOPS AI performance (INT8) | Varies by add-on (e.g., Hailo-8 NPU offers up to 26 TOPS AI performance) |
| RAM | 4GB/8GB LPDDR4 | 64GB LPDDR5 | 2GB/4GB/8GB LPDDR4X |
| Storage | 64GB/128GB UFS | 64GB eMMC 5.1 | MicroSD card |
| Wi-Fi | Wi-Fi 6E | Not included (add-ons available) | Dual-band 802.11ac |
| Cellular | 5G sub-6GHz (integrated) | Not included (add-ons available) | Not included (add-ons available) |
| Price | $199 | $1,999 | Starts at $50, plus cost of add-ons |

The choice ultimately depends on your project’s specific needs and priorities. Each SBC offers distinct advantages, allowing developers to select the platform best suited to their performance requirements, development resources, and budget.

Security and privacy considerations for AI at the edge

Unlike centralized systems, edge devices are often distributed across various environments, making them more vulnerable to potential attacks. Maintaining the security of these devices from both digital and physical threats is essential, especially when handling sensitive data.

- Secure boot and firmware updates ensure that edge devices only run trusted software by verifying the integrity of the firmware at startup through cryptographic checks. This prevents malicious code from being executed, reducing the risk of attacks at the device level. Regular firmware updates are also critical, but manual updates can be impractical in distributed edge environments. Over-the-air (OTA) updates allow organizations to remotely push patches and keep devices secure without requiring physical access.

- Data encryption, both at rest and in transit, is crucial for protecting information processed by edge devices. Even if data is intercepted, encryption ensures it remains unreadable without proper authorization.

- Anomaly detection has become integral to edge security. AI models can monitor device behavior for irregularities and flag deviations that may indicate a breach, so organizations can detect attacks early and minimize damage or data loss.

- Access control and strong authentication mechanisms, such as multi-factor authentication (MFA) or role-based access control, ensure that only authorized personnel can access or manage devices. This is especially important for remotely deployed edge devices, which may otherwise be vulnerable to unauthorized tampering.
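As a minimal illustration of the anomaly detection idea in the list above, the sketch below flags readings that deviate sharply from a device's recent behavior. It uses a rolling z-score, a simple statistical stand-in for the AI models the text describes. The class name, window size, and threshold are all hypothetical choices.

```python
import statistics
from collections import deque


class RollingAnomalyDetector:
    """Flag values more than z_threshold standard deviations from the recent mean."""

    def __init__(self, window: int = 30, z_threshold: float = 3.0, min_samples: int = 5):
        self.history = deque(maxlen=window)  # only the most recent `window` normal values
        self.z_threshold = z_threshold
        self.min_samples = min_samples

    def observe(self, value: float) -> bool:
        is_anomaly = False
        if len(self.history) >= self.min_samples:
            mean = statistics.fmean(self.history)
            stdev = statistics.pstdev(self.history)
            # With zero spread there is no scale to judge against, so nothing is flagged.
            if stdev > 0 and abs(value - mean) / stdev > self.z_threshold:
                is_anomaly = True
        if not is_anomaly:
            # Keep anomalies out of the baseline so one spike does not mask the next.
            self.history.append(value)
        return is_anomaly


detector = RollingAnomalyDetector()
normal_traffic = [10.0, 11.0, 9.0, 10.0, 12.0, 10.0, 11.0, 9.0, 10.0, 11.0]
flags = [detector.observe(v) for v in normal_traffic]
spike_flag = detector.observe(50.0)    # e.g. a sudden burst of outbound connections
recovery_flag = detector.observe(10.0)
print(flags, spike_flag, recovery_flag)
```

A production system would typically track several signals at once (network traffic, CPU load, login attempts) and use a trained model rather than a single threshold, but the flag-on-deviation structure is the same.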

From a regulatory standpoint, Edge AI offers distinct advantages for GDPR and CCPA compliance. These frameworks emphasize data minimization and user consent, which Edge AI can support by processing data locally rather than transmitting it to the cloud. Keeping data on the device reduces exposure to breaches, which supports compliance with privacy regulations and helps protect user data.

Robust security requires an integrated approach that encompasses both hardware and cloud services. Platforms offering secure device management tools enable remote monitoring, configuration, and updating of edge devices, ensuring they remain secure throughout their lifecycle.
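The integrity check behind secure boot and OTA updates can be sketched as "verify before you apply". The example below is a simplified illustration using only the Python standard library. Production secure boot usually relies on asymmetric signatures anchored in a hardware root of trust, so the shared-key HMAC here is a stand-in, and the key, image bytes, and function names are hypothetical.

```python
import hashlib
import hmac


def sign_firmware(image: bytes, key: bytes) -> str:
    """Build side: compute an HMAC-SHA256 tag over the firmware image."""
    return hmac.new(key, image, hashlib.sha256).hexdigest()


def verify_firmware(image: bytes, expected_tag: str, key: bytes) -> bool:
    """Device side: recompute the tag and compare in constant time."""
    actual_tag = hmac.new(key, image, hashlib.sha256).hexdigest()
    return hmac.compare_digest(actual_tag, expected_tag)


def apply_update(image: bytes, expected_tag: str, key: bytes) -> str:
    """Refuse to install anything that fails verification."""
    if not verify_firmware(image, expected_tag, key):
        return "rejected"
    # A real device would write the image to the inactive slot and reboot here.
    return "installed"


# Hypothetical key and image, for illustration only. Never hard-code real keys.
DEVICE_KEY = b"example-provisioned-key"
firmware = b"\x7fFIRMWARE v1.2.3 payload bytes"
tag = sign_firmware(firmware, DEVICE_KEY)

tampered = firmware.replace(b"v1.2.3", b"v6.6.6")
print(apply_update(firmware, tag, DEVICE_KEY), apply_update(tampered, tag, DEVICE_KEY))
```

Using `hmac.compare_digest` rather than `==` avoids leaking how many leading characters of the tag matched through timing differences.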

Developing and deploying Edge AI applications

Shipping successful Edge AI applications starts with model selection. Model zoos provide extensive collections of pre-trained models optimized for specific use cases, giving developers a proven foundation to build upon. For Tachyon users, Qualcomm's AI Hub offers models specifically optimized for Qualcomm SoCs, enabling teams to leverage solutions already fine-tuned for their hardware platform.

Once you've selected a model, optimizing its performance for edge devices is essential. Model optimization techniques reduce the model's size and computational demands, enabling faster inference, lower power consumption, and smoother performance on resource-constrained hardware:

- Quantization: Lowers numerical precision (for example, from 32-bit floats to 8-bit integers), reducing memory and power requirements while largely preserving model accuracy

- Pruning: Removes unnecessary model parameters, resulting in smaller, faster models

- Knowledge distillation: Trains smaller models to replicate the behavior of larger, complex models, improving efficiency while retaining most of their capability
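To show what the first two techniques do to the numbers inside a model, here is a framework-free sketch operating on a plain list of weights. It implements symmetric per-tensor int8 quantization and magnitude-based pruning, two common variants among several. The weights are made up, and real toolchains apply these steps per layer with calibration data.

```python
from typing import List, Tuple


def quantize_symmetric_int8(weights: List[float]) -> Tuple[List[int], float]:
    """Map floats to integers in [-127, 127] using a single shared scale factor."""
    max_abs = max(abs(w) for w in weights)
    scale = max_abs / 127 if max_abs > 0 else 1.0
    quantized = [max(-127, min(127, round(w / scale))) for w in weights]
    return quantized, scale


def dequantize(quantized: List[int], scale: float) -> List[float]:
    """Recover approximate float values; the rounding error is at most scale / 2."""
    return [q * scale for q in quantized]


def prune_by_magnitude(weights: List[float], sparsity: float) -> List[float]:
    """Zero out roughly the `sparsity` fraction of weights with the smallest magnitude."""
    k = int(len(weights) * sparsity)
    if k == 0:
        return list(weights)
    threshold = sorted(abs(w) for w in weights)[k - 1]
    # Ties at the threshold are pruned too, so slightly more than k may be removed.
    return [0.0 if abs(w) <= threshold else w for w in weights]


# Hypothetical weights for illustration.
weights = [0.82, -0.41, 0.03, -0.97, 0.5, 0.002]

q_weights, scale = quantize_symmetric_int8(weights)
restored = dequantize(q_weights, scale)
max_error = max(abs(a - b) for a, b in zip(weights, restored))

pruned = prune_by_magnitude(weights, sparsity=0.5)

print(q_weights, f"scale={scale:.5f}", f"max_error={max_error:.5f}")
print(pruned)
```

Each weight shrinks from 4 bytes as a 32-bit float to 1 byte as an int8 value, which is where the roughly 4x size reduction usually quoted for int8 quantization comes from. Pruned zeros save space and compute only when the runtime or storage format can exploit sparsity.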

With an optimized model ready, frameworks like TensorFlow Lite and PyTorch Mobile provide the runtimes and tools needed to deploy these models onto edge devices. Containerization then provides consistency across various devices, while CI/CD pipelines automate testing, updates, and maintenance, reducing manual intervention and helping keep deployed models current.

Ongoing monitoring and maintenance are critical after deployment. Remote management platforms like Particle's edge-to-cloud infrastructure offer:

- Inference time tracking: Monitor model performance to identify bottlenecks and optimize efficiency

- Power consumption metrics: Track energy usage to ensure your application meets power constraints

- OTA (Over-the-Air) updates: Deploy updates and security patches remotely, keeping your devices up-to-date without requiring physical access
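Inference time tracking, the first item above, amounts to timing each model call and reporting summary statistics over a recent window. The sketch below shows one way to do that on-device. It is not Particle's API: `LatencyTracker` and `fake_inference` are hypothetical names, and the 95th percentile uses the simple nearest-rank method.

```python
import statistics
import time
from collections import deque
from typing import Callable, Dict


class LatencyTracker:
    """Keep the most recent inference latencies and summarize them on demand."""

    def __init__(self, window: int = 100):
        self.samples_ms = deque(maxlen=window)

    def record(self, latency_ms: float) -> None:
        self.samples_ms.append(latency_ms)

    def timed(self, fn: Callable, *args, **kwargs):
        """Run fn, record how long it took in milliseconds, and return its result."""
        start = time.perf_counter()
        result = fn(*args, **kwargs)
        self.record((time.perf_counter() - start) * 1000.0)
        return result

    def report(self) -> Dict[str, float]:
        if not self.samples_ms:
            return {"count": 0}
        ordered = sorted(self.samples_ms)
        n = len(ordered)
        p95_rank = (95 * n + 99) // 100  # nearest-rank: ceil(0.95 * n) in integer math
        return {
            "count": n,
            "mean_ms": statistics.fmean(ordered),
            "p95_ms": ordered[p95_rank - 1],
            "max_ms": ordered[-1],
        }


def fake_inference(x: float) -> float:
    """Hypothetical stand-in for a real model call."""
    return x * 2.0


tracker = LatencyTracker(window=100)
output = tracker.timed(fake_inference, 21.0)
print(output, tracker.report())
```

Reporting a high percentile alongside the mean matters on constrained hardware, where occasional slow inferences (thermal throttling, memory pressure) are easy to miss in an average.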

By leveraging developer-centric platforms like Particle, which offers pre-configured environments and integrated development tools, deploying Edge AI can be significantly simpler. Seamless integration between edge devices and cloud services through unified edge-to-cloud solutions ensures efficient data management, real-time analytics, and scalability, enabling developers to focus on innovation rather than infrastructure challenges.

Unlocking organizational potential with Edge AI and Particle

Edge AI is quickly redefining IoT, with devices gaining the ability to process data locally and make real-time decisions. Throughout this guide, we’ve explored the critical factors that make this shift possible—from hardware choices to security measures. The advancements in tools, model optimization, and frameworks are allowing IoT systems to operate more efficiently and securely at the edge.

The Particle platform provides integrated, developer-friendly solutions that simplify the deployment of Edge AI, bridging the gap between hardware and cloud services. By streamlining development processes and ensuring seamless edge-to-cloud integration, Particle enables organizations to unlock new possibilities in the IoT landscape.

If you're considering how Edge AI can be integrated into your IoT projects, selecting the right technology is key. To learn more about how Particle can help your organization successfully deploy AI solutions at the edge, reach out to our experts for personalized guidance.