Flockr: An LTE-enabled smart bird feeder


Background

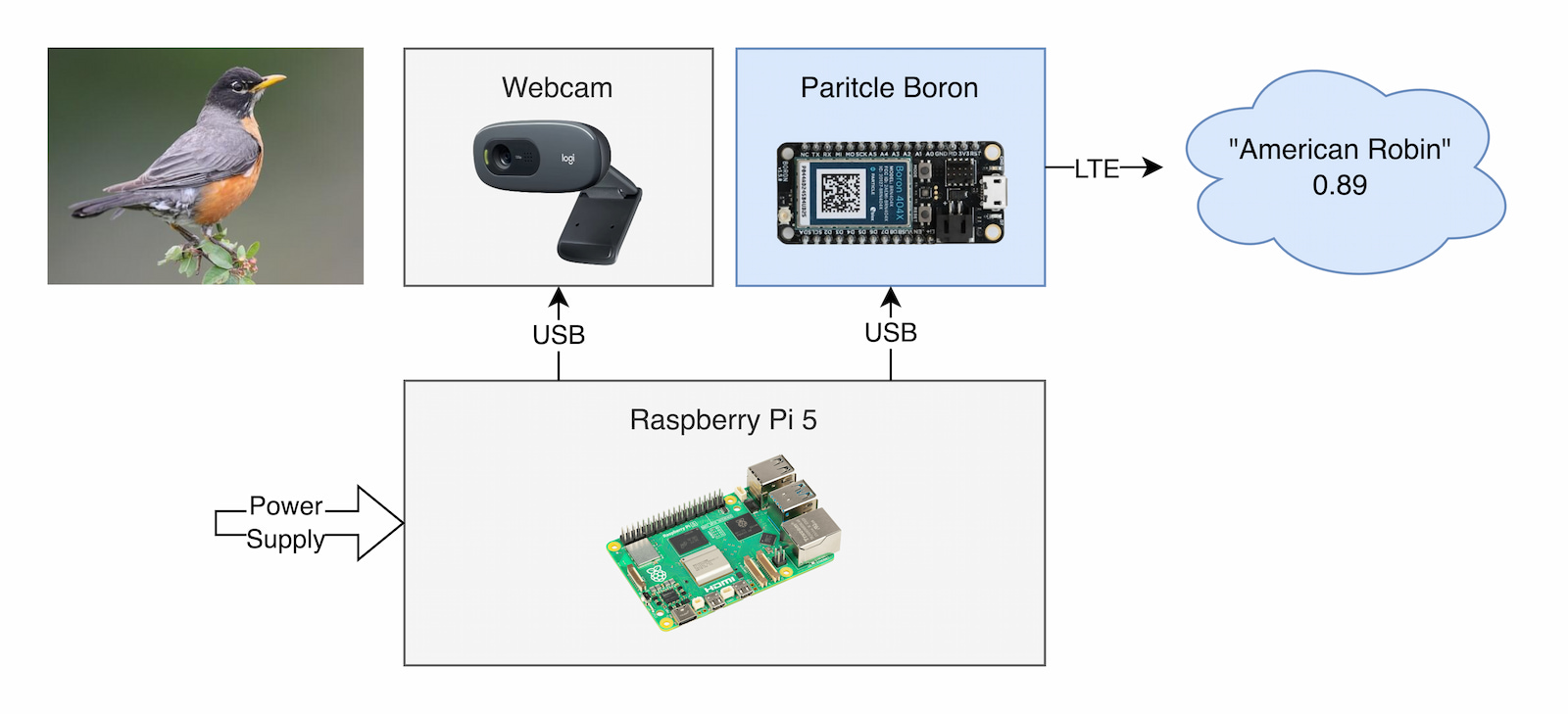

Most existing "smart bird feeders" rely on internet access, either to perform the classification remotely or to transmit the results to the end user. Flockr is a smart feeder project that can identify the species of a bird from a video feed without Wi-Fi access: the classification happens directly on the host Raspberry Pi 5. The application uses a TensorFlow Lite model fine-tuned for bird species identification. It sends the inference result and a base64-encoded image to the connected Particle Boron, which transmits the result over LTE to the end user. Ideally, Flockr can be deployed remotely while still reporting what kinds of birds are visiting the feeder.

Overview

We'll walk through the app running on the Raspberry Pi and the firmware running on the Boron. The next step in this project would be to configure a Particle integration such as Twilio to forward the inference result to users, but that’s out of scope for this post.

The Raspberry Pi application is written in Python and is containerized with Docker to simplify deployment.

The app running on the RPi manages the following:

- A Flask web server for visualization (if in range of Wi-Fi)

- Classification of a video feed using TFLite

- Transmission of the inference results and image via pyserial

The firmware running on the Boron manages:

- Receiving the inference results and image over its serial port

- Decoding the base64 image using the Base64RK library

- Uploading the image and inference results to the Particle Cloud

Host application

The Flockr app repository hosts the code that runs on the Raspberry Pi. The Dockerfile gives a good idea of the container structure; it’s relatively simple:

```dockerfile
# For more information, please refer to https://aka.ms/vscode-docker-python
FROM python:3.9

# Keeps Python from generating .pyc files in the container
ENV PYTHONDONTWRITEBYTECODE=1

# Turns off buffering for easier container logging
ENV PYTHONUNBUFFERED=1

# For fixing ImportError: libGL.so.1: cannot open shared object file: No such file or directory
RUN apt-get update && apt-get install ffmpeg libsm6 libxext6 -y

RUN pip install --upgrade pip
RUN pip index versions tflite-support

COPY requirements.txt .
RUN python -m pip install -r requirements.txt

WORKDIR /app
COPY . /app

# Expose port 5000 for Flask
EXPOSE 5000

# Set the environment variables for Flask hot reloading
ENV FLASK_APP=src/main.py
ENV FLASK_ENV=development

CMD ["flask", "run", "--host=0.0.0.0", "--port=5000", "--reload"]
```

The application can be started with docker-compose up --build. The host Raspberry Pi should have Docker and Docker Compose installed prior to trying to run the application. We also recommend plugging in the camera and the Boron prior to starting the container, as we’ve found this increases the odds that the ports are correctly mapped.

If you run into an error that a device was not found (/dev/ttyACM0 for the Boron or /dev/video0 for the webcam), that device is most likely disconnected or connected to a different port.
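A missing device node only surfaces as an error once the container is running. It can therefore help to check for both devices on the Pi before running docker-compose up --build. The sketch below is not part of the Flockr repository. `missing_devices` and `preflight` are hypothetical helpers, and the paths are the defaults named above.

```python
import os

# Default device nodes named above; adjust if your camera or Boron
# enumerates differently (for example /dev/ttyACM1).
REQUIRED_DEVICES = ["/dev/video0", "/dev/ttyACM0"]


def missing_devices(paths=REQUIRED_DEVICES):
    """Return the device paths that do not exist on this host."""
    return [path for path in paths if not os.path.exists(path)]


def preflight(paths=REQUIRED_DEVICES):
    """Print a short report and return True only if every device is present."""
    missing = missing_devices(paths)
    if missing:
        print("Missing devices: " + ", ".join(missing))
        return False
    print("All devices present")
    return True
```

If, for example, the Boron has enumerated as /dev/ttyACM1, calling preflight() on the host lists /dev/ttyACM0 as missing and returns False.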

The Flask app is configured in src/main.py. It serves the /demo and /capture routes, both linked from the landing page at /. Flockr uses Socket.IO to pass data between the front-end HTML and the Python backend that runs the inference, as shown below:

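```html
<script>
  // Assumes the Socket.IO client library is already loaded on the page
  var socket = io.connect('http://' + document.domain + ':' + location.port);

  // Update the label and score whenever the backend emits a "result" event
  socket.on('result', function (data) {
    document.getElementById('label').innerText = data?.label ?? "Unknown";
    document.getElementById('score').innerText = data?.score ?? 0;
  });
</script>
```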

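The templates folder hosts the barebones HTML pages, which embed the video feed. Flask's url_for takes an endpoint name (by default, the view function's name), so the live feed is referenced as video_capture rather than by its URL path:

```html
<img src="{{ url_for('video_capture') }}" width="640" height="480">
```

The feed itself is generated by dedicated routes in main.py, such as the live capture route: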

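```python
from flask import Response

# app, socketio, and generate_frames (from utils.py) are assumed to be
# defined or imported elsewhere in main.py


@app.route("/video-capture")
def video_capture():
    # Stream live camera frames (is_live=True) as an MJPEG multipart response
    return Response(
        generate_frames(True, socketio),
        mimetype="multipart/x-mixed-replace; boundary=frame",
    )
```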

The magic happens in utils.py with generate_frames.

Here we’re:

Opening up a video stream either from a local test file (if demo) or from a live feed (if capture).

```python
if is_live:
    cap = cv2.VideoCapture(0)  # live camera
else:
    cap = cv2.VideoCapture("test-video.mp4")  # local demo file

fps = cap.get(cv2.CAP_PROP_FPS)  # Get video FPS
# Some cameras may report 0 FPS; clamp so the modulo check never divides by zero
frame_interval = max(1, int(fps))
frame_text = ["Unknown"]
frame_count = 0

while cap.isOpened():
    ret, frame = cap.read()
    if not ret:
        print("End of video.")
        break
```

Handling fast-forward or rewind events from the demo page.

```python
# should_ff / should_rw are assumed to be module-level flags set by the demo
# page's controls (and declared global inside generate_frames)
if should_ff:
    current_frame = cap.get(cv2.CAP_PROP_POS_FRAMES)
    new_frame = current_frame + (10 * frame_interval)  # about 10 seconds ahead
    cap.set(cv2.CAP_PROP_POS_FRAMES, new_frame)
    should_ff = False

if should_rw:
    current_frame = cap.get(cv2.CAP_PROP_POS_FRAMES)
    # About 10 seconds back, but never before the first frame
    new_frame = max(0, current_frame - (10 * frame_interval))
    cap.set(cv2.CAP_PROP_POS_FRAMES, new_frame)
    should_rw = False
```
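frame_interval is set from the video's FPS, so a jump of 10 * frame_interval frames is roughly ten seconds of footage. Here is the same calculation as a pure function, which makes the clamping easy to test. `seek_target` is a hypothetical name. The optional `total_frames` upper clamp is an addition that the snippet above does not have.

```python
def seek_target(current_frame, frame_interval, forward, seconds=10, total_frames=None):
    """Frame index to jump to for a fast-forward (forward=True) or rewind.

    frame_interval is int(fps), so seconds * frame_interval frames is about
    `seconds` of video.
    """
    step = seconds * frame_interval
    target = current_frame + step if forward else current_frame - step
    target = max(0, target)  # never seek before the first frame
    if total_frames is not None:
        # Hypothetical extra clamp: stay on the last frame instead of past the end
        target = min(target, total_frames - 1)
    return target
```

For example, rewinding from frame 100 of a 30 FPS clip lands on frame 0 rather than a negative index.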

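Running inference once every frame_interval frames. Since frame_interval is the video's frame rate, this works out to roughly one classification per second of video.

```python
if frame_count % frame_interval == 0:
    # OpenCV decodes frames as BGR; convert to RGB before classification
    frame_rgb = cv2.cvtColor(frame, cv2.COLOR_BGR2RGB)
    np_image = format_frame(frame_rgb)
    categories = classify(np_image)
    category = categories[0]  # top-scoring category
    index = category.index
    score = category.score
    display_name = category.display_name
```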
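The modulo check means most frames are only streamed to the web view and never classified. The sketch below lists which frame indices trigger an inference. It assumes `frame_count` is incremented once per frame read; that increment is not shown in the excerpt. It also guards against cameras that report 0 FPS, which is our own assumption. `inference_frames` is a hypothetical helper.

```python
def inference_frames(fps, total_frames):
    """Frame indices at which the loop would call classify().

    Mirrors `frame_count % frame_interval == 0` with frame_interval = int(fps),
    assuming frame_count increases by one per frame read.
    """
    frame_interval = max(1, int(fps))  # assumption: guard against a reported FPS of 0
    return [n for n in range(total_frames) if n % frame_interval == 0]
```

At 30 FPS, a 91-frame clip is classified at frames 0, 30, 60, and 90. At 29.97 FPS, int() truncates the interval to 29 frames.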

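Checking whether the score is above our THRESHOLD and, if so, emitting the result to both the serial port and the Socket.IO connection. The image and the INFERENCE line only go out over serial when the species differs from the last bird seen, so a bird that lingers at the feeder is reported once.

```python
# Skip label index 964 (presumably the model's non-bird "background" class)
if index != 964 and score > THRESHOLD:
    timestamp = cap.get(cv2.CAP_PROP_POS_MSEC) / 1000  # milliseconds to seconds
    common_name = get_common_name(display_name)
    if common_name != last_bird_seen:
        # Only send the image and result over serial when the species changes
        send_image(frame)
        serial_str = f"INFERENCE={common_name},{score}\n"
        ser.write(serial_str.encode())
        last_bird_seen = common_name
    result_text = [f"Label: {common_name} Score: {score}"]
    print(f"Timestamp: {timestamp} seconds, {result_text}")
    socketio.emit("result", {'label': common_name, 'score': score})
```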
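The filtering rules in this step can be written as a pure function over a sequence of detections:

- skip index 964;
- require score > THRESHOLD;
- only send over serial when the species changes.

This sketch is for reasoning about the behavior; it is not code from utils.py. The `Detection` type, the 0.5 threshold, and treating 964 as the model's non-bird class are all assumptions.

```python
from typing import Iterable, List, NamedTuple


class Detection(NamedTuple):
    index: int
    score: float
    common_name: str


BACKGROUND_INDEX = 964  # assumed: the label index the app ignores
THRESHOLD = 0.5  # hypothetical value; the app defines its own THRESHOLD


def serial_messages(detections: Iterable[Detection], threshold: float = THRESHOLD) -> List[str]:
    """Return the INFERENCE lines that would be written to the serial port."""
    messages = []
    last_bird_seen = None
    for d in detections:
        if d.index == BACKGROUND_INDEX or d.score <= threshold:
            continue  # ignored: background class or low confidence
        if d.common_name != last_bird_seen:
            messages.append(f"INFERENCE={d.common_name},{d.score}\n")
            last_bird_seen = d.common_name
    return messages
```

A robin detected on several consecutive inferences produces a single serial message. A low-confidence detection of a new species is dropped until a later frame clears the threshold.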

Finally, we pass the frame to the front end for the web view.

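```python
# buffer is assumed to come from cv2.imencode (that line is not shown in the excerpt)
ret, buffer = cv2.imencode(".jpg", frame)
# Emit the JPEG as one part of the multipart MJPEG stream
yield (
    b"--frame\r\n"
    b"Content-Type: image/jpeg\r\n\r\n" + buffer.tobytes() + b"\r\n"
)
```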
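Each yielded chunk is one part of a multipart/x-mixed-replace response. The `--frame` delimiter has to match the `boundary=frame` declared in the route's mimetype, and the browser swaps in each new part as the `<img>` contents. Below is a self-contained sketch of that framing; `mjpeg_part` is a hypothetical helper name.

```python
BOUNDARY = b"frame"  # must match boundary=frame in the Response mimetype


def mjpeg_part(jpeg_bytes: bytes) -> bytes:
    """Wrap one JPEG-encoded frame as a single multipart/x-mixed-replace part."""
    return (
        b"--" + BOUNDARY + b"\r\n"
        b"Content-Type: image/jpeg\r\n\r\n" + jpeg_bytes + b"\r\n"
    )
```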

There are some additional helper functions worth exploring (format_frame, classify, get_common_name, and send_image). We recommend reading through utils.py to get a good idea of everything that is going on.

Transmitter firmware

With the host application on the Pi spitting out serial data containing the encoded image and inference results, we can pass this data along to the cloud using the Particle ecosystem.

First, make sure the Particle device is properly configured in your account using the provided setup flow. If this is your first time working with a Particle device, you can read more about Device OS and about getting started with the Particle Workbench extension for VS Code.

Clone the Flockr transmitter firmware, then compile and flash it to your board.

Typed publishes require Device OS 6.3 or later. At the time of writing, you will need to enable pre-release builds in your Workbench extension.

The transmission firmware uses the Base64RK library to handle the incoming image data.

```cpp
#include "Particle.h"
#include <Base64RK.h>
```

It then initializes some global variables to manage application state.

```cpp
CloudEvent imageEvent;
CloudEvent inferenceEvent;
Variant inferenceData;

const int MAX_BUFFER_SIZE = 8192; // Adjust based on your needs
String base64Image;
uint8_t binaryData[MAX_BUFFER_SIZE];
volatile bool transferComplete = false;
```

Next, we set up the serial port and reserve memory for the base64 encoded image that we’re expecting from the host application.

```cpp
void setup()
{
    Serial.begin(115200);
    base64Image.reserve(MAX_BUFFER_SIZE); // Pre-allocate memory
}
```
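Base64 turns every 3 bytes into 4 characters, so the two buffers sized with MAX_BUFFER_SIZE limit the image in different ways:

- reserving 8192 characters for the string covers at most 6144 decoded bytes;
- filling the 8192-byte binaryData array takes a 10924-character string.

reserve() only pre-allocates memory, and the String can still grow beyond it. The decoded image, however, has to fit in binaryData. The arithmetic below is a quick desktop check, not firmware code; the helper names are illustrative.

```python
MAX_BUFFER_SIZE = 8192  # mirrors the firmware constant


def base64_length(num_bytes: int) -> int:
    """Characters needed to base64-encode num_bytes, including '=' padding."""
    return 4 * ((num_bytes + 2) // 3)


def max_decoded_bytes(num_chars: int) -> int:
    """Most bytes that num_chars of padded base64 can decode to."""
    return (num_chars // 4) * 3


# A string reserved for MAX_BUFFER_SIZE characters holds at most this many image bytes
reserved_string_fits = max_decoded_bytes(MAX_BUFFER_SIZE)
# Base64 characters needed to fill the MAX_BUFFER_SIZE-byte binaryData array
chars_to_fill_binary = base64_length(MAX_BUFFER_SIZE)
```

In practice, the JPEG the Pi sends needs to stay under MAX_BUFFER_SIZE bytes. Otherwise, raise the constant or shrink the image on the host before encoding.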

In the main loop, we check whether serial data is available and read the incoming line.

```cpp
void loop()
{
    if (Serial.available() > 0)
    {
        String incomingString = Serial.readStringUntil('\n');
        incomingString.trim();
```

The incoming serial data has the following format:

```text
START
...base64 chunks
END
INFERENCE=American robin,0.89
```
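The Pi-side send_image helper is not shown in this post. Below is a minimal sketch of producing this framing. It assumes the frame has already been JPEG-encoded (for example with cv2.imencode) and that the base64 text is split into fixed-size lines. The `frame_lines` name and the 512-character chunk size are hypothetical and may differ from utils.py.

```python
import base64
from typing import List

CHUNK_SIZE = 512  # hypothetical line length for the base64 chunks


def frame_lines(jpeg_bytes: bytes, common_name: str, score: float,
                chunk_size: int = CHUNK_SIZE) -> List[str]:
    """Build the newline-terminated lines in the order the firmware expects."""
    encoded = base64.b64encode(jpeg_bytes).decode("ascii")
    lines = ["START\n"]
    for start in range(0, len(encoded), chunk_size):
        lines.append(encoded[start:start + chunk_size] + "\n")
    lines.append("END\n")
    # Same format the app writes: name and score separated by a comma
    lines.append(f"INFERENCE={common_name},{score}\n")
    return lines
```

Each line could then be written with ser.write(line.encode()), the same way the application writes the INFERENCE line.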

Each incoming line is handled as shown below:

```cpp
if (incomingString == "START")
{
    Log.info("Starting base64 image reception.");
    base64Image = ""; // Clear the buffer
}
else if (incomingString == "END")
{
    Log.info("Completed base64 image reception. Length: %d", base64Image.length());
}
else if (incomingString.startsWith("INFERENCE"))
{
    int delimiter = incomingString.indexOf(",");
    int prefix = incomingString.indexOf("=");
    String inference = incomingString.substring(prefix + 1, delimiter);
    String confidence = incomingString.substring(delimiter + 1);
    inferenceData.set("inference", inference);
    inferenceData.set("confidence", confidence);
    Log.info("Inference: %s, Confidence: %s", inference.c_str(), confidence.c_str());
    transferComplete = true;
}
else
{
    base64Image += incomingString;
}
```
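You can test host output against this logic without flashing a Boron by mirroring the same line handling on a desktop. This Python sketch follows the branches above:

- reset on START;
- accumulate every other line;
- split INFERENCE on '=' and ','.

It is a test aid of our own, not part of the Flockr firmware, and `LineHandler` is a hypothetical name.

```python
import base64
from typing import Optional


class LineHandler:
    """Desktop mirror of the Boron's serial line-handling logic."""

    def __init__(self) -> None:
        self.base64_image = ""
        self.inference: Optional[dict] = None
        self.transfer_complete = False

    def handle(self, line: str) -> None:
        line = line.strip()  # like String::trim() in the firmware
        if line == "START":
            self.base64_image = ""  # clear the buffer
        elif line == "END":
            pass  # the firmware only logs the accumulated length here
        elif line.startswith("INFERENCE"):
            prefix = line.index("=")
            delimiter = line.index(",")
            self.inference = {
                "inference": line[prefix + 1:delimiter],
                "confidence": line[delimiter + 1:],
            }
            self.transfer_complete = True
        else:
            self.base64_image += line  # any other line is a base64 chunk

    def image_bytes(self) -> bytes:
        return base64.b64decode(self.base64_image)
```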

Outside of the Serial.available() block, we check whether the transfer has completed. If it has, we decode the base64Image string and publish both the image data and the inference data.

```cpp
if (transferComplete)
{
    transferComplete = false;

    // On input, binarySize is the capacity of binaryData; Base64::decode
    // updates it to the number of bytes actually decoded.
    size_t binarySize = sizeof(binaryData);
    Log.info("Base64 string length: %d chars", (int)base64Image.length());
    bool success = Base64::decode(base64Image.c_str(), binaryData, binarySize);

    if (success)
    {
        Log.info("Decoded binary size: %d bytes", (int)binarySize);
        imageEvent.name("image");
        imageEvent.data((const char *)binaryData, binarySize, ContentType::JPEG);
        Particle.publish(imageEvent);
    }
    else
    {
        // Don't publish a partially decoded image
        Log.warn("Failed to decode Base64.");
    }

    inferenceEvent.name("inference");
    inferenceEvent.data(inferenceData);
    Particle.publish(inferenceEvent);
}
```
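Sizing the decoded image as three quarters of the base64 length gives an upper bound, not the exact size. Each trailing '=' padding character stands for a byte that does not exist, so the estimate can overshoot by up to two bytes. The exact length follows from the padding, as this quick Python check shows. `decoded_length` is an illustrative helper, not part of Base64RK.

```python
def decoded_length(encoded: str) -> int:
    """Exact number of bytes a padded base64 string decodes to."""
    if len(encoded) % 4 != 0:
        raise ValueError("padded base64 length must be a multiple of 4")
    padding = len(encoded) - len(encoded.rstrip("="))
    return len(encoded) * 3 // 4 - padding
```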

Finally, we monitor the state of our publish operations.

```cpp
if (imageEvent.isSent())
{
    Log.info("Image publish succeeded");
    imageEvent.clear();
}
else if (!imageEvent.isOk())
{
    Log.info("Image publish failed error=%d", imageEvent.error());
    imageEvent.clear();
}

if (inferenceEvent.isSent())
{
    Log.info("Inference publish succeeded");
    inferenceEvent.clear();
}
else if (!inferenceEvent.isOk())
{
    Log.info("Inference publish failed error=%d", inferenceEvent.error());
    inferenceEvent.clear();
}
```

Particle Console

If everything works, you should see images start streaming into your device’s details view in the Particle Console. As of Device OS 6.3, you can also view a preview of the typed image events in the events log.

Conclusion

Hopefully this project will help you build your own LTE-enabled smart bird feeder. It’s a bit of a different take on what is already out there, providing a simple LTE tether with minimal software and no custom hardware. With a nice project box and extension cord, you’ll be classifying birds in no time!
